I have a ZFS pool (a mirror) on two SATA disks under Solaris 11, running on my HP MicroServer Gen8. Both disks are 3 TB Toshiba desktop drives, and they are more than 4 years old. The pool stores all my photos, so I decided to add one more disk to the mirror for extra redundancy.

I purchased an HP disk (6G SATA Non-Hot Plug LFF (3.5-inch) Midline (MDL) drive), which is recommended in the Gen8 specs (628065-B21, https://www.hpe.com/h20195/v2/GetPDF.aspx/c04128132.pdf), and it comes with a 1-year warranty.

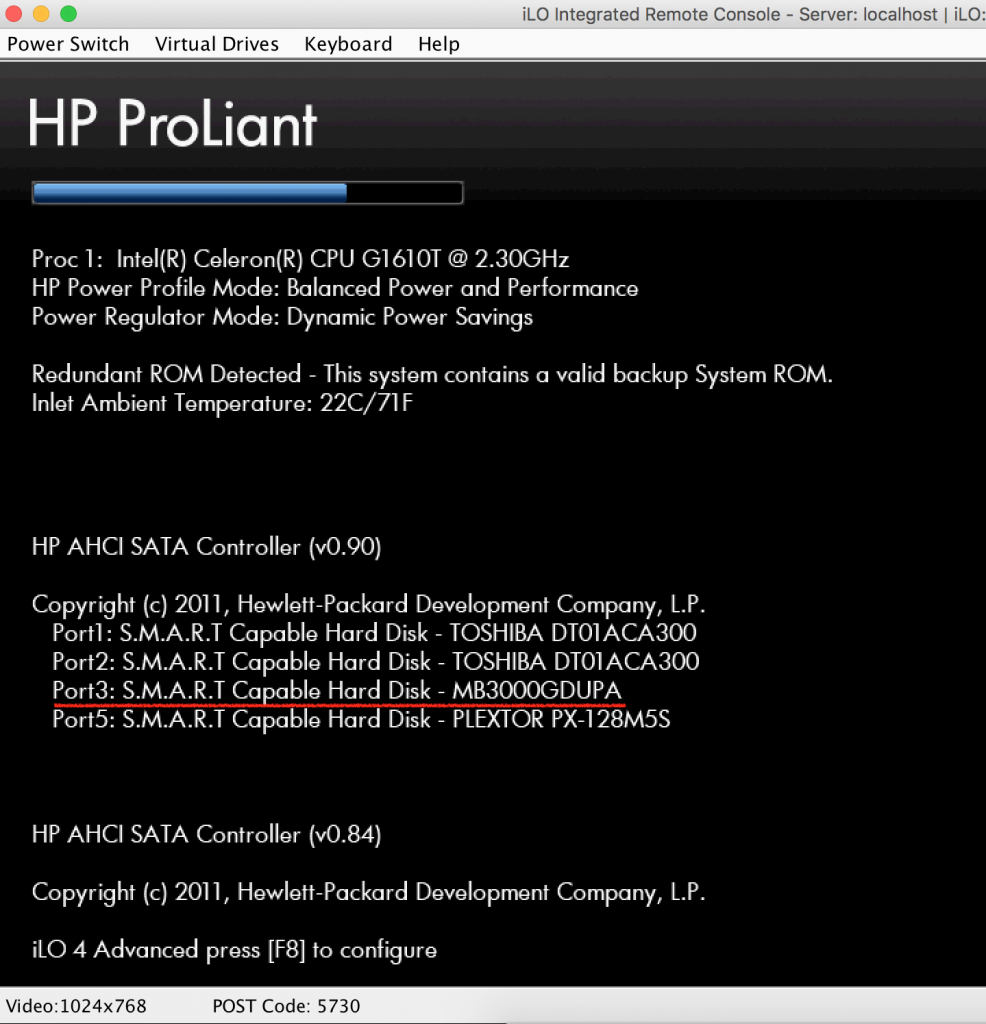

Insert the carrier into the Gen8 and power it on. The POST screen shows that the new disk has been detected.

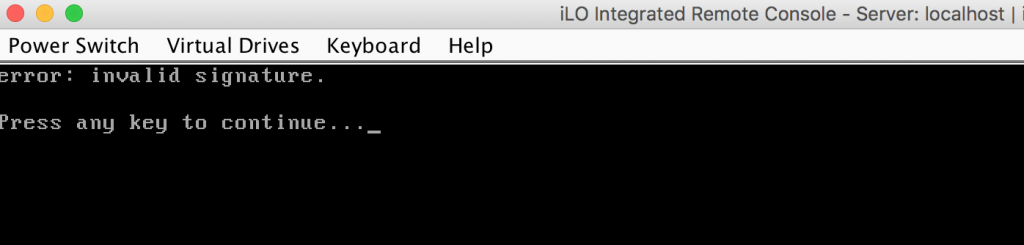

But GNU GRUB failed to boot Solaris.

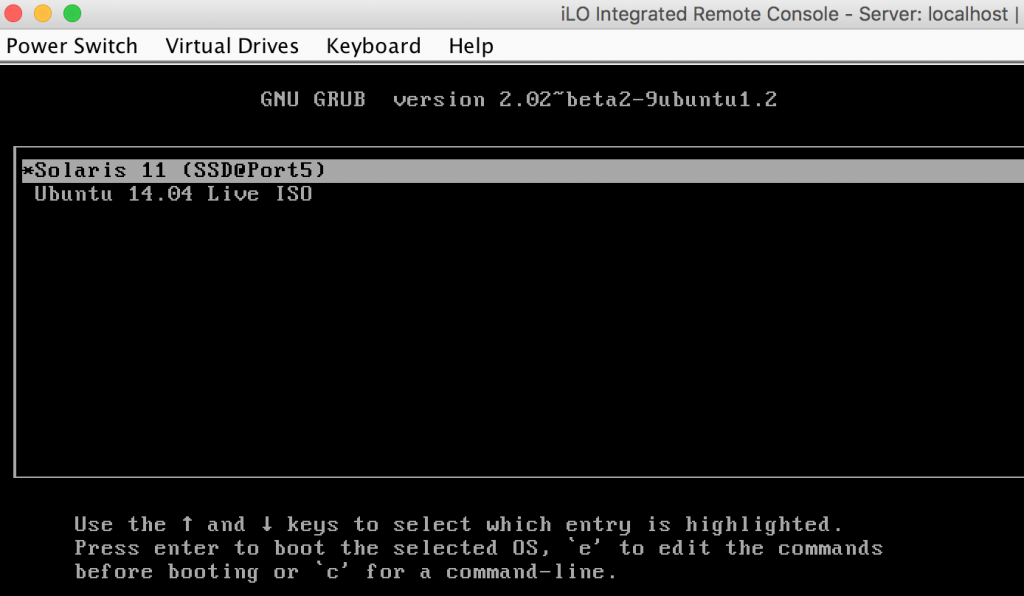

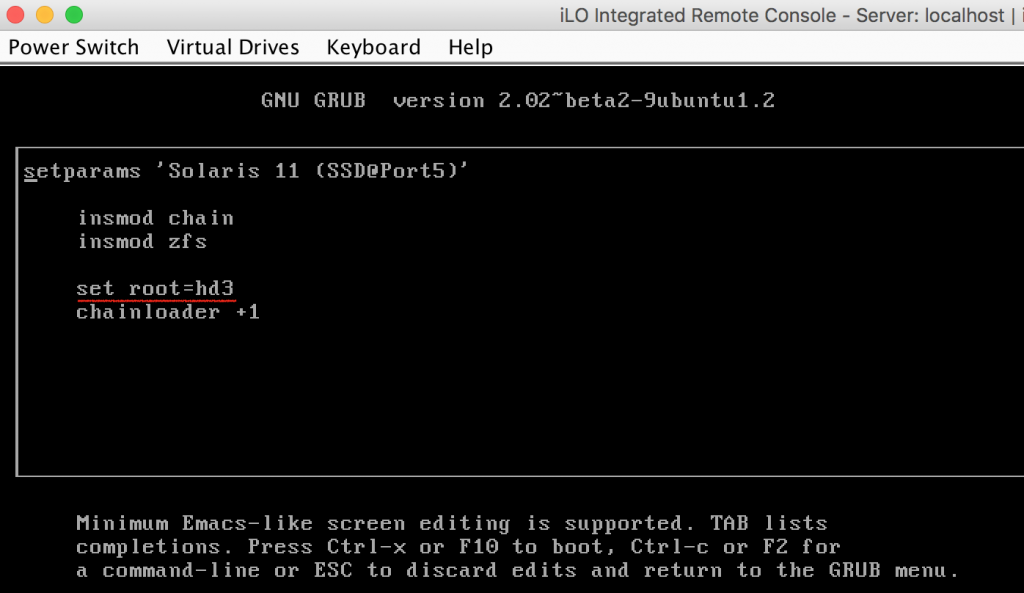

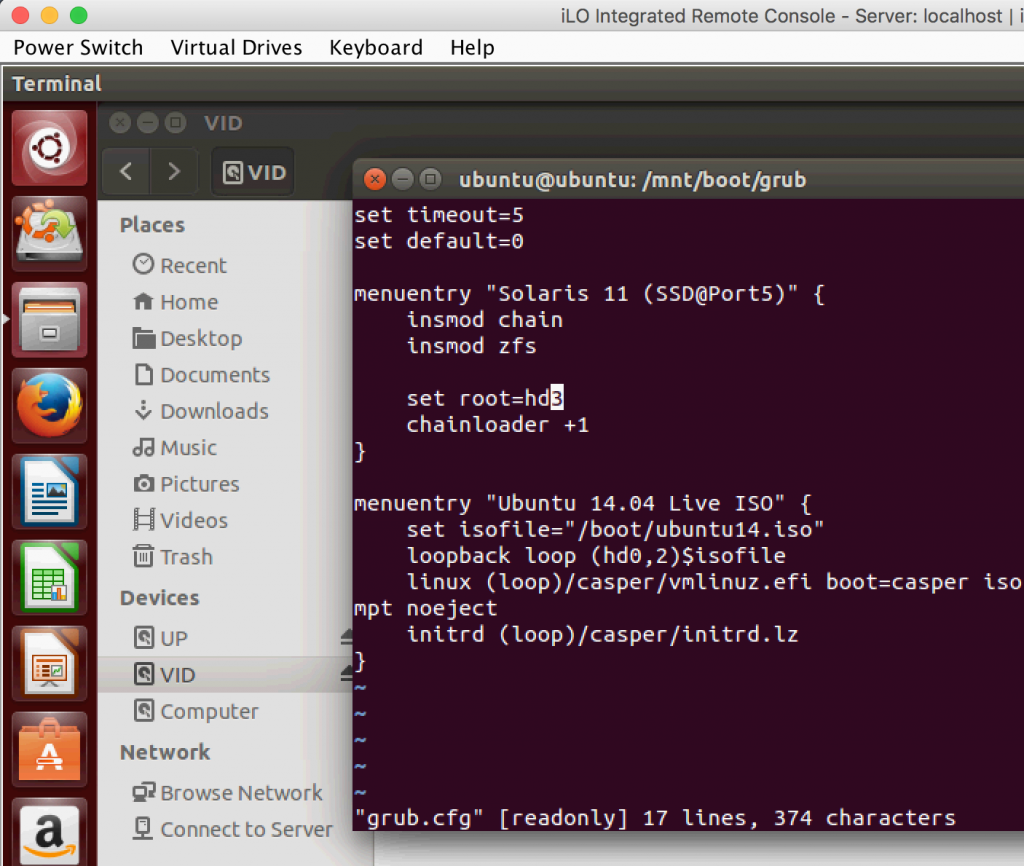

I installed Solaris 11 on my PLEXTOR SSD, which is connected to port 5 (originally designed for the optical drive) on the MicroServer Gen8. The Gen8 does not support booting directly from port 5, but it does support booting from the internal microSD card. So I installed GNU GRUB on the SD card, and GRUB boots the Solaris 11 installation on the SSD at port 5.

Because I added a new disk, the disk order changed, and the SSD at port 5 moved from position 3 to position 4.

The fix is simple. Power off the Gen8, remove the SD card, mount it on another system (e.g. a MacBook), update the disk number of the SSD in the GRUB configuration file at /boot/grub/grub.cfg, then reinsert the SD card. The server then boots successfully!
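The edit itself can be sketched as a small shell helper. This is only an illustration under assumptions: the post does not show the contents of grub.cfg, so the `(hd3,...)` device syntax, the helper name `bump_grub_disk`, and the mount path in the example are all hypothetical. Check how your own grub.cfg refers to the SSD before changing anything.

```shell
# Hypothetical helper: rewrite GRUB disk references such as (hd3,...) to (hd4,...).
# Usage: bump_grub_disk <grub.cfg> <old-index> <new-index>
bump_grub_disk() {
    cfg=$1 old=$2 new=$3
    cp "$cfg" "$cfg.bak" || return 1    # keep a backup of the original file
    # Match "(hdN" only when followed by "," or ")" so that hd3 does not match hd30.
    sed "s/(hd${old}\([,)]\)/(hd${new}\1/g" "$cfg.bak" > "$cfg"
}

# Example, with the SD card mounted at an assumed path:
# bump_grub_disk /Volumes/SDCARD/boot/grub/grub.cfg 3 4
```

The helper avoids `sed -i` because its syntax differs between macOS and GNU sed, and it leaves a `.bak` copy next to the file so the change is easy to undo.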

After logging into Solaris, list the pools and check their status:

    root@solar:~# zpool list
    NAME    SIZE  ALLOC   FREE  CAP  DEDUP  HEALTH  ALTROOT
    rpool   118G  14.2G   104G  12%  1.00x  ONLINE  -
    sp     2.72T   315G  2.41T  11%  1.00x  ONLINE  -
    root@solar:~# zpool status
      pool: rpool
     state: ONLINE
      scan: none requested
    config:

            NAME      STATE     READ WRITE CKSUM
            rpool     ONLINE       0     0     0
              c3t4d0  ONLINE       0     0     0

    errors: No known data errors

      pool: sp
     state: ONLINE
      scan: scrub repaired 0 in 3h51m with 0 errors on Sat Aug 5 13:02:29 2017
    config:

            NAME        STATE     READ WRITE CKSUM
            sp          ONLINE       0     0     0
              mirror-0  ONLINE       0     0     0
                c3t0d0  ONLINE       0     0     0
                c3t1d0  ONLINE       0     0     0

    errors: No known data errors

You can see that I have one pool named *sp*, which is a mirror (mirror-0) built on two disks, c3t0d0 and c3t1d0.

Then use format to identify the new disk.

    root@solar:~# format
    Searching for disks...done

    c2t0d0: configured with capacity of 1.83GB
    c2t0d1: configured with capacity of 254.00MB

    AVAILABLE DISK SELECTIONS:
           0. c2t0d0 <HP iLO-Internal SD-CARD-2.10 cyl 936 alt 2 hd 128 sec 32>
              /pci@0,0/pci103c,330d@1d/hub@1/hub@3/storage@1/disk@0,0
           1. c2t0d1 <HP iLO-LUN 01 Media 0-2.10 cyl 254 alt 2 hd 64 sec 32>
              /pci@0,0/pci103c,330d@1d/hub@1/hub@3/storage@1/disk@0,1
           2. c3t0d0 <ATA-TOSHIBA DT01ACA3-ABB0-2.73TB>
              /pci@0,0/pci103c,330d@1f,2/disk@0,0
           3. c3t1d0 <ATA-TOSHIBA DT01ACA3-ABB0-2.73TB>
              /pci@0,0/pci103c,330d@1f,2/disk@1,0
           4. c3t2d0 <ATA-MB3000GDUPA-HPG4-2.73TB>
              /pci@0,0/pci103c,330d@1f,2/disk@2,0
           5. c3t4d0 <ATA-PLEXTOR PX-128M5-1.05-119.24GB>
              /pci@0,0/pci103c,330d@1f,2/disk@4,0

The disk with ID c3t2d0 is the one I just added to Gen8.
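Before touching the pool, it can be worth confirming that the new device name is not already part of any pool. The helper below is a minimal sketch, not something from the original setup: the function name `device_in_pool` is hypothetical, and it simply looks for the device name in the first column of `zpool status` output read from standard input.

```shell
# Succeeds if the given device name appears in the first column of the
# `zpool status` text read from standard input.
device_in_pool() {
    awk -v dev="$1" '$1 == dev { found = 1 } END { exit !found }'
}

# Example: warn if c3t2d0 already belongs to a pool.
# if zpool status | device_in_pool c3t2d0; then
#     echo "c3t2d0 is already in use" >&2
# fi
```

Run against the status output shown earlier, this would succeed for c3t0d0 and c3t1d0 and fail for c3t2d0, which matches the expectation that the new disk is still unused.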

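Attach the new disk to the existing pool. The zpool attach command takes the pool name, a device that is already in the mirror, and the new device.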

    root@solar-1:~# zpool attach sp c3t1d0 c3t2d0

Check the status of the pool again:

    root@solar-1:~# zpool status -v
      pool: rpool
     state: ONLINE
      scan: none requested
    config:

            NAME      STATE     READ WRITE CKSUM
            rpool     ONLINE       0     0     0
              c3t4d0  ONLINE       0     0     0

    errors: No known data errors

      pool: sp
     state: DEGRADED
    status: One or more devices is currently being resilvered. The pool will
            continue to function in a degraded state.
    action: Wait for the resilver to complete.
      scan: resilver in progress since Sat Sep 30 01:30:55 2017
        315G scanned
        39.2G resilvered at 119M/s, 12.42% done, 0h39m to go
    config:

            NAME        STATE     READ WRITE CKSUM
            sp          DEGRADED     0     0     0
              mirror-0  DEGRADED     0     0     0
                c3t0d0  ONLINE       0     0     0
                c3t1d0  ONLINE       0     0     0
                c3t2d0  DEGRADED     0     0     0  (resilvering)

    device details:

            c3t2d0    DEGRADED      scrub/resilver needed
            status: ZFS detected errors on this device.
                    The device is missing some data that is recoverable.
               see: http://support.oracle.com/msg/ZFS-8000-QJ for recovery

    errors: No known data errors

The pool is reported as *DEGRADED* while it is resilvering, which means ZFS is copying data from the existing disks to the new one. The output also shows how much data has been scanned and resilvered so far, along with an estimate of the time remaining.
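Rather than re-running the status command by hand, the wait can be scripted. The following is a rough sketch under assumptions: the function name `resilver_in_progress` is hypothetical, and it relies only on the "resilver in progress" text that appears in the scan line of the status output above.

```shell
# Succeeds while the `zpool status` text on standard input still reports
# a running resilver.
resilver_in_progress() {
    grep -q 'resilver in progress'
}

# Example: poll the sp pool once a minute until the resilver has finished.
# while zpool status sp | resilver_in_progress; do
#     sleep 60
# done
# zpool status sp
```

Matching on a message string is fragile if the wording differs between ZFS versions, so treat this as a convenience for this particular setup rather than a general check.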

After the resilvering has finished:

    root@solar-1:~# zpool status -v
      pool: rpool
     state: ONLINE
      scan: none requested
    config:

            NAME      STATE     READ WRITE CKSUM
            rpool     ONLINE       0     0     0
              c3t4d0  ONLINE       0     0     0

    errors: No known data errors

      pool: sp
     state: ONLINE
      scan: resilvered 315G in 0h46m with 0 errors on Sat Sep 30 02:17:11 2017
    config:

            NAME        STATE     READ WRITE CKSUM
            sp          ONLINE       0     0     0
              mirror-0  ONLINE       0     0     0
                c3t0d0  ONLINE       0     0     0
                c3t1d0  ONLINE       0     0     0
                c3t2d0  ONLINE       0     0     0

    errors: No known data errors

The new disk has been added and synced successfully!